Have you ever wondered what the difference is between the .NET “Decimal” data type and the familiar “float” or “double”? Ever wondered when you should use one versus the other? To answer these questions, take a look at the following C# code:

```csharp
using System;

namespace IEEE_Floating_Point_Problems
{
    class Program
    {
        static void Main(string[] args)
        {
            int iteration_num = 1;

            Console.WriteLine("First loop, using float type:");

            // runs only four times instead of the expected five!
            for (float d = 1.1f; d <= 1.5f; d += 0.1f)
            {
                Console.WriteLine("Iteration #: {0}, float value: {1}", iteration_num++, d.ToString("e10"));
            }

            Console.WriteLine("\r\nSecond loop, using Decimal type:");

            // reset iteration count
            iteration_num = 1;

            // runs correctly for five iterations
            for (Decimal d = 1.1m; d <= 1.5m; d += 0.1m)
            {
                Console.WriteLine("Iteration #: {0}, Decimal value: {1}", iteration_num++, d.ToString("e10"));
            }

            Console.WriteLine("Press any key to continue...");
            Console.ReadKey();
        }
    }
}
```

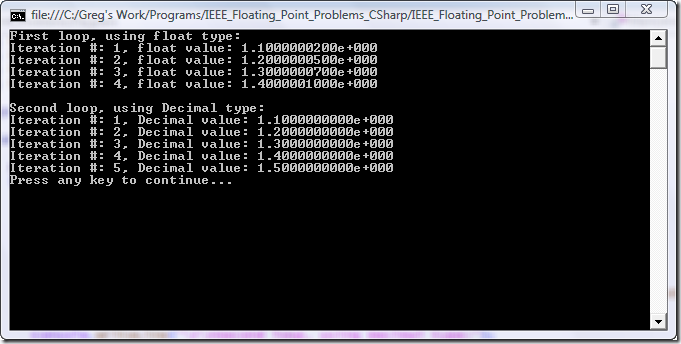

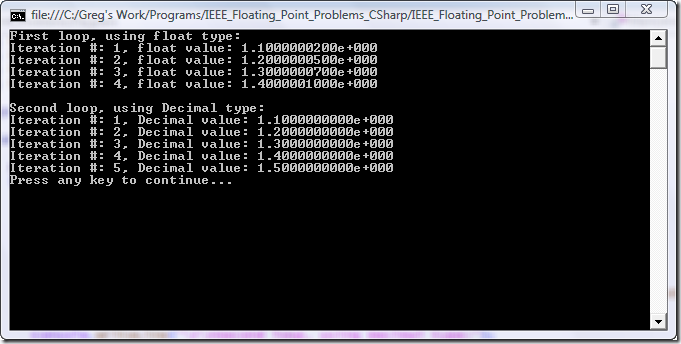

Here is what the output looks like:

At first glance, looking at the code and not the output, it seems like the first for() loop should run for five iterations. After all, there are five values from 1.1 up to and including 1.5 stepping by 0.1 (i.e. 1.1, 1.2, 1.3, 1.4, and 1.5). But in reality, the loop runs only four iterations. Why is this? Also, why was 1.10000002 assigned as the first value of “d” instead of the hard-coded 1.1? The reason is simple: we’re working on hardware that uses binary floating point representation as opposed to decimal representation. Because binary floating point is base two (binary) rather than base 10 (decimal), most decimal fractions, such as 0.1, have no exact binary form, so what gets stored is only the closest binary approximation of the true decimal number.
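You can see the approximation directly by widening a float to double (a double can hold every float value exactly) and printing it with 17 significant digits. This is a minimal sketch that assumes a modern .NET with C# 9 or later for top-level statements; `StoredValue` is a hypothetical helper name, not a .NET API.

```csharp
using System;
using System.Globalization;

// Hypothetical helper: widening float -> double is exact, so printing the double
// with 17 significant digits ("G17") reveals the binary value the float really holds.
static string StoredValue(float f) =>
    ((double)f).ToString("G17", CultureInfo.InvariantCulture);

Console.WriteLine($"1.1f is stored as {StoredValue(1.1f)}");
Console.WriteLine($"0.1f is stored as {StoredValue(0.1f)}");
Console.WriteLine($"1.5f is stored as {StoredValue(1.5f)}");

// 1.5 = 1 + 1/2 has a finite binary expansion, so a float keeps it exactly...
Console.WriteLine($"1.5f equals double 1.5: {(double)1.5f == 1.5}");
// ...but 1.1 does not, so the float and double approximations of 1.1 differ.
Console.WriteLine($"1.1f equals double 1.1: {(double)1.1f == 1.1}");
```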

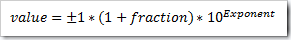

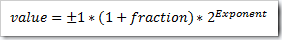

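In order to understand this better, we’ll take the common (IEEE 754) floating point formula but use base 10 instead of two. A value is built from a sign bit $s$, a fraction $f$ and an exponent $e$:

$$(-1)^{s} \times (1 + f) \times 10^{e}$$

Filling in the variables to represent a value of 1.1 ($s = 0$, $f = 0.1$, $e = 0$) we get:

$$
\begin{aligned}
(-1)^{0} \times (1 + 0.1) \times 10^{0} &= (+1) \times (1 + 0.1) \times 10^{0} \\
&= (1 + 0.1) \times 10^{0} \\
&= 1.1 \times 10^{0} \\
&= 1.1 \times 1 = 1.1
\end{aligned}
$$

That is exactly the correct value.

In the real base two version everything is the same except the 10 changes to a two:

$$(-1)^{s} \times (1 + f) \times 2^{e}$$

Since $1.1 = (1 + 0.1) \times 2^{0}$, the fraction part is again 0.1. If you try to fill in this equation, you’ll immediately see the problem when converting 0.1 into binary. The standard method is repeated doubling: multiply the fraction by 2, write down the integer part (0 or 1) as the next binary digit, and continue with whatever fraction is left over. Let’s do it here: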

- 0.1 x 2 = 0.2; so the binary digit is 0
- 0.2 x 2 = 0.4; so the binary digit is 0
- 0.4 x 2 = 0.8; so the binary digit is 0
- 0.8 x 2 = 1.6; so the binary digit is 1
- 0.6 x 2 = 1.2; so the binary digit is 1
- 0.2 x 2 = 0.4; so the binary digit is 0
- 0.4 x 2 = 0.8; so the binary digit is 0
- 0.8 x 2 = 1.6; so the binary digit is 1
- 0.6 x 2 = 1.2; so the binary digit is 1
- 0.2 x 2 = 0.4; so the binary digit is 0
- 0.4 x 2 = 0.8; so the binary digit is 0
- 0.8 x 2 = 1.6; so the binary digit is 1
- 0.6 x 2 = 1.2; so the binary digit is 1
- and so on…

We end up with the binary fraction 0.0001100110011…, where the block of four digits 0011 repeats forever. Therefore, it’s impossible to represent 0.1 with a finite binary number. If we can’t represent 0.1 exactly, then the rest of the equation will not evaluate precisely to 1.1; rather, it will be slightly more or slightly less, depending on how the infinite pattern is rounded to the bits of precision available (23 fraction bits for a float). This explains why the hard-coded “1.1” value changed slightly once assigned to the “d” variable. It can never be exactly 1.1, because no finite binary fraction equals 1.1.
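The repeated-doubling procedure from the list above is easy to automate, and comparing its output with the bits a float actually stores shows the rounding at work. This is a minimal sketch assuming .NET Core 2.0 or later (for `BitConverter.SingleToInt32Bits`) and C# 9 top-level statements; `FractionToBinary` and `FloatFractionBits` are hypothetical helper names. It uses `decimal` for the doubling so that the arithmetic on 0.1 itself is exact.

```csharp
using System;
using System.Text;

// Hypothetical helper: repeated doubling, as in the list above.
// Double the fraction; the integer part (0 or 1) is the next binary digit;
// continue with what is left. decimal keeps each doubling of 0.1 exact.
static string FractionToBinary(decimal fraction, int maxDigits)
{
    var digits = new StringBuilder();
    while (fraction != 0m && digits.Length < maxDigits)
    {
        fraction *= 2m;
        if (fraction >= 1m)
        {
            digits.Append('1');
            fraction -= 1m;
        }
        else
        {
            digits.Append('0');
        }
    }
    return digits.ToString();
}

// Hypothetical helper: the 23 fraction bits an IEEE 754 single-precision float
// stores (layout: 1 sign bit, 8 exponent bits, 23 fraction bits).
static string FloatFractionBits(float value)
{
    int bits = BitConverter.SingleToInt32Bits(value);
    int fractionField = bits & 0x7FFFFF;                 // keep the low 23 bits
    return Convert.ToString(fractionField, 2).PadLeft(23, '0');
}

string exact = FractionToBinary(0.1m, 23);   // first 23 binary digits of 0.1
string stored = FloatFractionBits(1.1f);     // fraction bits actually kept for 1.1f

Console.WriteLine($"0.1 in binary (23 digits): {exact}");
Console.WriteLine($"stored fraction of 1.1f:   {stored}");
```

The two strings agree in their first 22 digits. The last stored bit is 1 rather than 0 because the infinite pattern is rounded to the nearest 23-bit value; the discarded bits start with 1100…, which is more than half a unit, so it rounds up. That is why 1.1f ends up slightly larger than 1.1.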

These small errors accumulate in the first loop as 0.1 is added to “d” after each iteration. After the fourth addition, “d” is slightly greater than 1.5, so the d <= 1.5f test fails and the loop exits (the value 1.5 itself can be represented exactly in binary and is not approximated). Therefore only four iterations are run instead of the expected five.
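To watch this happen, the sketch below reruns the article’s float loop, prints each value of “d” with more digits, shows the value that finally stopped the loop, and counts the iterations next to the equivalent decimal loop. It assumes C# 9 or later (top-level statements); the variable names are illustrative.

```csharp
using System;
using System.Globalization;

// Same float loop as the article, written as a while loop so that "d"
// is still in scope afterwards and we can inspect the value that ended it.
int iterations = 0;
float d = 1.1f;
while (d <= 1.5f)
{
    iterations++;
    string shown = ((double)d).ToString("G9", CultureInfo.InvariantCulture);
    Console.WriteLine($"iteration {iterations}: d = {shown}");
    d += 0.1f;   // each addition is rounded to the nearest representable float
}
string stopValue = ((double)d).ToString("G9", CultureInfo.InvariantCulture);
Console.WriteLine($"loop stopped with d = {stopValue} after {iterations} iterations");

// The decimal version of the same loop, for comparison.
int decimalIterations = 0;
for (decimal m = 1.1m; m <= 1.5m; m += 0.1m)
{
    decimalIterations++;
}
Console.WriteLine($"decimal loop ran {decimalIterations} iterations");
```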

The .NET Decimal Type

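So what’s the deal with this .NET “Decimal” type? It is a floating point type whose scaling is done in base 10 instead of base two: internally it stores a sign, a 96-bit integer coefficient, and a power-of-ten scale factor between 0 and 28, so its value is the coefficient divided by 10 raised to the scale. Because base 10 is our real-world numbering system, any decimal number that fits in those 28 to 29 significant digits, such as 1.1 or 0.1, is stored exactly without approximating. (Values with no finite decimal expansion, such as 1/3, still have to be rounded.) This is why the second for() loop runs for the expected five iterations and the variable “d” always has the exact hard-coded value assigned to it.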
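Internally, System.Decimal keeps an integer coefficient plus a power-of-ten scale, and you can look at both with `decimal.GetBits`, which returns four 32-bit integers: the low, middle and high words of the 96-bit coefficient, followed by a flags word holding the scale in bits 16 to 23 and the sign in bit 31. This is a minimal sketch (C# 9 or later; `Inspect` is a hypothetical helper) that also shows a value like 1/3 still being rounded.

```csharp
using System;
using System.Numerics;

// Hypothetical helper: splits a decimal into the 96-bit integer coefficient and the
// power-of-ten scale that System.Decimal stores (value = ±coefficient / 10^scale).
static (BigInteger Coefficient, int Scale) Inspect(decimal value)
{
    int[] parts = decimal.GetBits(value);          // lo, mid, hi, flags
    BigInteger coefficient =
        ((BigInteger)(uint)parts[2] << 64) |
        ((BigInteger)(uint)parts[1] << 32) |
        (uint)parts[0];
    int scale = (parts[3] >> 16) & 0xFF;           // bits 16-23 of the flags word
    return (coefficient, scale);
}

var (coef, sc) = Inspect(1.1m);
Console.WriteLine($"1.1m is stored as {coef} / 10^{sc}");   // 11 / 10^1

// 1/3 has no finite decimal expansion, so decimal must round it (to 28 places).
decimal third = 1m / 3m;
Console.WriteLine($"1m / 3m = {third}");
Console.WriteLine($"(1m / 3m) * 3m == 1m: {third * 3m == 1m}");
```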

The Decimal type is really a struct (System.Decimal, in both C# and MC++) that provides overloaded operators for all the math and comparison operations. In other words, it’s a software implementation of base 10 arithmetic rather than something the processor does natively.
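A small sketch of the “struct with overloaded operators” point: in C#, the `decimal` keyword is simply an alias for `System.Decimal`, which is a value type, and the `+` operator gives the same result as the static `decimal.Add` method. The double comparison at the end is there only for contrast. It assumes C# 9 or later for top-level statements.

```csharp
using System;

// The C# keyword "decimal" is just an alias for the System.Decimal struct.
bool sameType = typeof(decimal) == typeof(Decimal);
bool isStruct = typeof(decimal).IsValueType;
Console.WriteLine($"decimal is System.Decimal: {sameType}, value type: {isStruct}");

// Decimal arithmetic is performed by the struct's own code: the + operator and
// the static Add method produce the same base 10 result.
decimal a = 0.1m, b = 0.2m;
decimal viaOperator = a + b;
decimal viaMethod = decimal.Add(a, b);
Console.WriteLine($"operator: {viaOperator}, Add: {viaMethod}, equals 0.3m: {viaOperator == 0.3m}");

// For contrast, the same sum in binary floating point (double) is not exactly 0.3.
double x = 0.1, y = 0.2;
Console.WriteLine($"0.1 + 0.2 == 0.3 with double: {x + y == 0.3}");
```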

Which Type Should I Use?

Since Decimal values represent decimal fractions like 0.1 exactly and floats do not, why would we still want to use the intrinsic float/double types? Short answer: performance. In my speed tests, Decimal types ran over 20 times slower than their float counterparts.
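If you want to measure the gap on your own machine, a rough timing sketch like the one below will do. It is not the author’s original speed test: the summing loop, the iteration count `N` and the helper names `SumDouble`/`SumDecimal` are assumptions, and single-run `Stopwatch` timings vary with hardware, runtime version and JIT, so treat any ratio you see as indicative only. It assumes C# 9 or later for top-level statements.

```csharp
using System;
using System.Diagnostics;

const int N = 10_000_000;   // assumed iteration count; adjust to taste

// Hypothetical helpers: add 0.1 n times and report the sum and elapsed time.
static (double Sum, long Ms) SumDouble(int n)
{
    var sw = Stopwatch.StartNew();
    double sum = 0.0;
    for (int i = 0; i < n; i++) sum += 0.1;
    return (sum, sw.ElapsedMilliseconds);
}

static (decimal Sum, long Ms) SumDecimal(int n)
{
    var sw = Stopwatch.StartNew();
    decimal sum = 0m;
    for (int i = 0; i < n; i++) sum += 0.1m;
    return (sum, sw.ElapsedMilliseconds);
}

var (dSum, dMs) = SumDouble(N);
var (mSum, mMs) = SumDecimal(N);
Console.WriteLine($"double : sum = {dSum:R}, {dMs} ms");
Console.WriteLine($"decimal: sum = {mSum}, {mMs} ms");
if (dMs > 0)
    Console.WriteLine($"decimal/double time ratio: {(double)mMs / dMs:F1}");
```

The decimal sum lands exactly on one million, while the double sum is subject to the rounding described earlier, which is the accuracy-versus-speed trade-off in miniature.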

So if you’re writing a financial application for a bank that has to be 100% accurate and performance is not a consideration, use the Decimal type. On the other hand, if you need performance and extremely small floating point variations don’t affect your program, stick with the float and double types.

Other Considerations

Another thing the Decimal type can do that the float and double types cannot is encode trailing zeros. (Note: some non-Intel base two architectures can also encode trailing zeros, but those are out of the scope of this article.) For example, 7.5 and 7.50 have different representations in the Decimal type (they compare equal but print differently), but there is no way to represent this difference in a standard float/double. Let’s look at another example; check out the following MC++ code:

```cpp
#include "stdafx.h"

using namespace System;

int main(array<System::String ^> ^args)
{
    double number = 1.23 + 1.27;
    Console::WriteLine("double: {0}", number);

    Decimal decimal = (Decimal)1.23 + (Decimal)1.27;
    Console::WriteLine("decimal: {0}", decimal);

    Console::WriteLine("Press any key to continue...");
    Console::ReadKey();
    return 0;
}
```

The first part, which uses a double, outputs 2.5, but the second one, which uses a Decimal, outputs 2.50. Each converted operand keeps two digits after the decimal point, and Decimal addition preserves that scale, so we didn’t even have to specify a format string in order to get the trailing zero. This could be very useful in applications that deal with dollar amounts.
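For C# readers, here is a sketch of the same trailing-zero behavior, plus the 7.5 versus 7.50 example mentioned earlier. It assumes C# 9 or later (top-level statements) and formats with `CultureInfo.InvariantCulture` so the decimal separator is a period regardless of the machine’s regional settings.

```csharp
using System;
using System.Globalization;

// decimal remembers its scale (digits after the point), so 7.5m and 7.50m are
// equal in value but print differently. double keeps no such information.
decimal a = 7.5m;
decimal b = 7.50m;
Console.WriteLine(a == b);                                      // True
Console.WriteLine(a.ToString(CultureInfo.InvariantCulture));    // 7.5
Console.WriteLine(b.ToString(CultureInfo.InvariantCulture));    // 7.50

// C# version of the MC++ example: each converted operand has scale 2, and
// decimal addition keeps that scale, so the sum prints with a trailing zero.
decimal sum = (decimal)1.23 + (decimal)1.27;
double dsum = 1.23 + 1.27;
Console.WriteLine(sum.ToString(CultureInfo.InvariantCulture));   // 2.50
Console.WriteLine(dsum.ToString(CultureInfo.InvariantCulture));  // 2.5
```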

More Information

If you want to get more information regarding binary floating point versus decimal floating point, see this awesome FAQ by IBM:

http://www2.hursley.ibm.com/decimal/decifaq.html

Conclusion

I hope this has shed some light on the differences between the .NET Decimal type and the standard float/double types. If you have any questions or notice any typos in this article, please email me through my Contact page:

https://gregs-blog.com/contact

Thanks for reading! 🙂

-Greg Dolley